Introduction to OpenCascade and CAD modelling kernels

This article is essentially a transcript of the corresponding CAD programming lesson we published on our YouTube channel. In this series, we cover the basic aspects of geometric modelling and introduce the OpenCascade library from a practical perspective.

Welcome to CAD programming lessons.

Today, let's talk about modelling kernels in general and OpenCascade's position in this market.

Some interesting CAD kernels.

OpenCascade is not alone, although if we list the other CAD kernels worth discussing, we get only a handful of libraries, all written in C/C++. ACIS and Parasolid are the best-known and most respected commercial kernels among them, and many CAD packages rely on them entirely. For example, SolidWorks is based on Parasolid, while SpaceClaim is built on ACIS. Then there is the Catia Geometric Modeller or, as it is called for marketing reasons, the Convergence Geometric Modeller, which is the backbone of Catia.

There are also a couple of newcomer libraries. The first one is the commercial kernel C3D (www.c3dlabs.com), a remarkable software package that backs Kompas, a locally popular CAD system. Unlike the other players, the C3D developers publish their documentation without any restrictions, and they seem to compete actively with OpenCascade for market share in the niche of affordable CAD engines.

Then we have RGK (www.rgkernel.com), which is something of a phantom. It has never been released as a product you could license, but it is a very interesting project nonetheless. RGK stimulated interest in CAD and gave people another push to do academic research around CAD and its classic algorithms, such as Boolean operations.

You might think that Boolean operations and other modelling functions are old news and a largely solved problem. But that is not necessarily true. Geometric modelling algorithms are evolutionary beasts: very often there is no single right or wrong way of implementing things, and one modelling problem can be attacked in different ways. Of course, there are state-of-the-art approaches, along with stronger and weaker methods, and RGK was yet another attempt to revisit the foundations and build a full-featured CAD kernel from scratch. We cannot call this project a failure, because there is still nothing to test, but neither can we call it a success, for exactly the same reason. Still, some interesting papers were published, and our field got a breath of fresh air with new people involved.

Then there is SMLib (www.smlib.com). Although it is not that popular, I wanted to list it here, because SMLib is remarkable in at least two respects. First, SMLib is genuinely grounded in state-of-the-art scientific literature: The NURBS Book stands behind it, and SMLib implements the algorithms from that book, which remains the main reference for anyone implementing NURBS computations from scratch. Second, SMLib stands out for the way you license it. With other commercial libraries, as a rule, you only get the binaries and never the sources. With SMLib, at least when I first came across it, you could also get the sources, which means you are somewhat protected if the vendor goes out of business.

But access to the sources is not only about legal issues. It is simply handy to be able to dig into the sources to find out how to invoke one function or another. In many respects, the best documentation is the source code itself. Personally, I like having the sources just to get a better idea of the mechanics under the hood. Sometimes you can save tons of time simply by discovering that you are invoking an API routine with incorrect arguments that, say, fail trivial contract checks. This discussion about the availability of sources brings us naturally to OpenCascade.

OpenCascade is the only open-source kernel among those listed, and that's why we love it. You might argue that OpenCascade does not stand out in terms of features, and indeed, it contains no breakthrough functionality. But as a matter of fact, it simply works, and it allows us to design 3D products for various engineering (and not only engineering) workflows. So let's see what we get with OpenCascade. But before we dive deeper, I'd like to say a few words about the technology common to all the listed kernels.

CAD model representation schemes (CSG and B-rep).

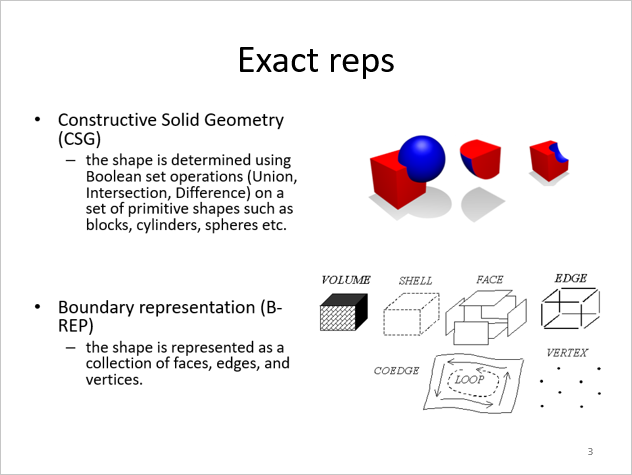

The first question you have to ask when you start modelling is how to represent your object so that a computer can manage it. How do you digitize your shape so that you can ask your system various geometric questions and get practical answers? The fundamental question here is whether an arbitrary point of the ambient space belongs to the object you are about to represent. If we can make our system answer this simple question, then we know, at least theoretically, the volume of space occupied by our shape. This question brings us to what is called the "Point Membership Classification" problem, or PMC for short.
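To make PMC concrete, here is a minimal C++ sketch that is not taken from OpenCascade or any other kernel: `Point3`, `PointState`, `classifySphere` and `estimateVolume` are hypothetical names. It classifies a point against a solid sphere using a distance tolerance, and then estimates a volume using nothing but the PMC oracle, by counting how many grid cell centres of a bounding box are not outside the shape.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Minimal 3D point type (hypothetical, for illustration only).
struct Point3 { double x, y, z; };

// Possible answers of Point Membership Classification.
enum class PointState { In, On, Out };

// Classifies p against a solid sphere of radius r centred at c.
// tol is the distance tolerance used to decide "on the boundary".
PointState classifySphere(const Point3& p, const Point3& c, double r, double tol = 1e-7)
{
  assert(r > 0.0 && tol >= 0.0);  // trivial contract check
  const double dx = p.x - c.x, dy = p.y - c.y, dz = p.z - c.z;
  const double d  = std::sqrt(dx * dx + dy * dy + dz * dz) - r;  // signed distance to the surface
  if (std::fabs(d) <= tol) return PointState::On;
  return d < 0.0 ? PointState::In : PointState::Out;
}

// Estimates the volume of any solid for which only a PMC oracle is available:
// the centres of an n x n x n grid over the box [lo, hi] are classified,
// and every centre that is not Out contributes one cell volume.
double estimateVolume(const std::function<PointState(const Point3&)>& pmc,
                      const Point3& lo, const Point3& hi, int n)
{
  assert(n > 0);
  const double hx = (hi.x - lo.x) / n, hy = (hi.y - lo.y) / n, hz = (hi.z - lo.z) / n;
  long inside = 0;
  for (int i = 0; i < n; ++i)
    for (int j = 0; j < n; ++j)
      for (int k = 0; k < n; ++k) {
        const Point3 p{lo.x + (i + 0.5) * hx, lo.y + (j + 0.5) * hy, lo.z + (k + 0.5) * hz};
        if (pmc(p) != PointState::Out) ++inside;
      }
  return inside * hx * hy * hz;
}
```

The estimate converges to the true volume as `n` grows, which is the practical meaning of "knowing the volume, at least theoretically" once PMC is solved.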

Answering it is not trivial, because there are many ways to do it. One commercial-grade technology, proven by decades of industrial use, is boundary representation, or simply B-rep. But this state-of-the-art boundary representation is not the only option.

To start with, there is another, older technology called Constructive Solid Geometry, or CSG. In CSG, you simply have a list of analytically defined primitives. For example, a sphere described by its centre and radius, or a box defined by its corner position and dimensions. You obtain your final shape by arranging these primitives in a tree, which is a sort of program, or declaration, of how to build the final geometry as a series of Boolean operations. This approach has pros and cons, and its main advantage is that the final shape is guaranteed to be sound and perfect, whatever you do with the operands. In the worst case, the CSG program simply yields an empty result. But if you combine your primitives in a way that does produce some result, you end up with perfectly valid geometry, simply because there is no way to break the whole thing: you never evaluate the boundaries, so you never hold them explicitly in memory. Instead, you solve the PMC problem with respect to the CSG tree declaration. And as long as there is no boundary computation, you are safe.
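The CSG idea fits in a few dozen lines of C++. This is an illustrative toy under simplifying assumptions, not how any production kernel stores CSG: all names are hypothetical, nodes only answer `contains()`, boundary points count as inside (closed sets), and the regularization that real CSG systems apply to Boolean results is ignored. Note that no boundary is ever computed; classifying a point simply walks the tree.

```cpp
#include <cassert>
#include <memory>
#include <utility>

struct Point3 { double x, y, z; };

// A node of a CSG tree: it can only tell whether a point belongs to the solid.
struct CsgNode {
  virtual ~CsgNode() = default;
  virtual bool contains(const Point3& p) const = 0;
};
using CsgPtr = std::shared_ptr<const CsgNode>;

// Analytic primitive: sphere given by centre and radius.
struct Sphere : CsgNode {
  Point3 c; double r;
  Sphere(Point3 c_, double r_) : c(c_), r(r_) { assert(r > 0.0); }
  bool contains(const Point3& p) const override {
    const double dx = p.x - c.x, dy = p.y - c.y, dz = p.z - c.z;
    return dx * dx + dy * dy + dz * dz <= r * r;
  }
};

// Analytic primitive: axis-aligned box given by its min corner and dimensions.
struct Box : CsgNode {
  Point3 corner, size;
  Box(Point3 corner_, Point3 size_) : corner(corner_), size(size_) {
    assert(size.x > 0.0 && size.y > 0.0 && size.z > 0.0);
  }
  bool contains(const Point3& p) const override {
    return p.x >= corner.x && p.x <= corner.x + size.x &&
           p.y >= corner.y && p.y <= corner.y + size.y &&
           p.z >= corner.z && p.z <= corner.z + size.z;
  }
};

enum class BoolOp { Union, Intersection, Difference };

// Interior node: a Boolean operation over two subtrees, evaluated lazily per point.
struct BoolNode : CsgNode {
  BoolOp op; CsgPtr a, b;
  BoolNode(BoolOp op_, CsgPtr a_, CsgPtr b_) : op(op_), a(std::move(a_)), b(std::move(b_)) {
    assert(a && b);
  }
  bool contains(const Point3& p) const override {
    switch (op) {
      case BoolOp::Union:        return a->contains(p) || b->contains(p);
      case BoolOp::Intersection: return a->contains(p) && b->contains(p);
      case BoolOp::Difference:   return a->contains(p) && !b->contains(p);
    }
    return false;
  }
};
```

For example, `BoolNode(BoolOp::Difference, box, ball)` cuts a spherical pocket out of a box corner, and every membership query just recurses down to the primitives.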

One might say that CSG is a boutique technology, or even a dead one. But that is not entirely correct, because CSG has another incarnation in classical feature-based modelling systems, such as SolidWorks, and it still finds its niche. After all, what is a feature? Imagine a stock volume of material in which you'd like to drill a hole by simply running a Boolean operation between the stock shape and the feature shape. Whatever representations these operand shapes have, you end up with a tree of Boolean (or other modelling) operations that still looks a bit like CSG, because you need to store the operand shapes and the relationships between them somewhere in your specification, or feature tree. Then, whenever you modify a design parameter, the CSG is evaluated once again to produce a new shape.
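A feature tree can be sketched the same way. In the following C++ example, the names `PlateParams`, `stockFeature`, `holeFeature` and `regenerate` are hypothetical: the design is stored as an ordered list of features that is replayed with the current parameters whenever something changes. For brevity, shapes are again plain membership predicates, whereas a real feature-based system would pass evaluated B-rep operands between features.

```cpp
#include <cassert>
#include <functional>
#include <vector>

struct Point3 { double x, y, z; };

// A solid is represented only by its membership predicate, as in CSG.
using Solid = std::function<bool(const Point3&)>;

// Editable design parameters (hypothetical names, for illustration).
struct PlateParams {
  double length = 100.0, width = 60.0, thickness = 10.0;
  double holeX = 50.0, holeY = 30.0, holeRadius = 5.0;
};

// A feature maps the shape built so far, plus the parameters, to a new shape.
using Feature = std::function<Solid(const Solid&, const PlateParams&)>;

// Stock feature: unites the current shape with a plate whose min corner is at the origin.
Solid stockFeature(const Solid& base, const PlateParams& prm) {
  return [base, prm](const Point3& p) {
    const bool inPlate = p.x >= 0.0 && p.x <= prm.length &&
                         p.y >= 0.0 && p.y <= prm.width &&
                         p.z >= 0.0 && p.z <= prm.thickness;
    return base(p) || inPlate;
  };
}

// Hole feature: subtracts a vertical cylinder (the tool) from the current shape.
Solid holeFeature(const Solid& base, const PlateParams& prm) {
  assert(prm.holeRadius > 0.0);
  return [base, prm](const Point3& p) {
    const double dx = p.x - prm.holeX, dy = p.y - prm.holeY;
    const bool inTool = dx * dx + dy * dy <= prm.holeRadius * prm.holeRadius;
    return base(p) && !inTool;  // Boolean difference: stock minus tool
  };
}

// Regeneration: replay the feature list, starting from the empty set.
Solid regenerate(const std::vector<Feature>& tree, const PlateParams& prm) {
  Solid shape = [](const Point3&) { return false; };
  for (const Feature& f : tree) shape = f(shape, prm);
  return shape;
}
```

Changing `holeRadius` and calling `regenerate()` again yields a new shape without touching the feature list itself, which is the essence of parametric regeneration.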

Still, traditional CSG is not the best technology out there, because the most intuitive way to describe a shape is to describe all its boundary surfaces explicitly. This is where boundary representation comes onto the scene. The very idea of boundary representation is to give exact mathematical equations for the surfaces that outline the exterior of your CAD part in 3D. We could talk at length about the underlying data structures of B-rep, such as how the shape representation is split into topology and geometry, but these are just implementation details, while the main principle holds: you need to define the surface of your model using the apparatus of computational geometry. This is where the whole modelling business starts to sound really scientific. We have a set of perfectly exact mathematical equations for all the surfaces and curves composing our model's boundary. Using boundary representation, we can easily sample our models, getting points on the boundary without much effort and without any tricky numerical methods. But point membership classification is still an issue, and you have to perform fairly tricky computations to classify a point with respect to the boundary: is the point inside, outside, or on the boundary of the model? To find out, you have to do a sort of inversion, because you are only equipped with the parametric equations of surfaces, and these alone are not enough to reason about the enclosed volume. In this regard, B-rep is a bit less convenient than CSG.
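The asymmetry between sampling and classification is easy to see on the simplest curved boundary. The sketch below assumes a solid bounded by a single spherical face parameterized as S(u, v) = c + r (cos u cos v, sin u cos v, sin v). Evaluating S is a direct formula, whereas classifying a point first requires inverting it (projecting the point onto the surface) and then checking on which side of the outward normal the point lies. For a sphere the inversion has a closed form; a general NURBS surface needs an iterative solver, and a real solid with many faces must also find the relevant face. `SphericalSurface` and `classify` are hypothetical names; OpenCascade's own tools for this job (such as `Geom_SphericalSurface`, `GeomAPI_ProjectPointOnSurf` and `BRepClass3d_SolidClassifier`) are not used here.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Spherical surface S(u, v) = c + r (cos u cos v, sin u cos v, sin v),
// with u in (-pi, pi] and v in [-pi/2, pi/2]. Hypothetical minimal geometry class.
struct SphericalSurface {
  Vec3 c; double r;

  // Forward evaluation: cheap and exact, so sampling the boundary is trivial.
  Vec3 eval(double u, double v) const {
    return {c.x + r * std::cos(u) * std::cos(v),
            c.y + r * std::sin(u) * std::cos(v),
            c.z + r * std::sin(v)};
  }

  // Outward unit normal at (u, v).
  Vec3 normal(double u, double v) const {
    return {std::cos(u) * std::cos(v), std::sin(u) * std::cos(v), std::sin(v)};
  }

  // Point inversion: parameters (u, v) of the surface point closest to p.
  // Closed form for a sphere; general surfaces need e.g. Newton iterations.
  void invert(const Vec3& p, double& u, double& v) const {
    const Vec3 d = p - c;
    u = std::atan2(d.y, d.x);
    v = std::atan2(d.z, std::hypot(d.x, d.y));
  }
};

enum class PointState { In, On, Out };

// Classifies p against the solid bounded by the sphere: project p onto the
// boundary, then use the sign of its offset along the outward normal.
PointState classify(const SphericalSurface& s, const Vec3& p, double tol = 1e-7) {
  assert(s.r > 0.0);
  double u = 0.0, v = 0.0;
  s.invert(p, u, v);
  const double offset = dot(p - s.eval(u, v), s.normal(u, v));
  if (std::fabs(offset) <= tol) return PointState::On;
  return offset < 0.0 ? PointState::In : PointState::Out;
}
```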

CAD model representation schemes (facets and voxels).

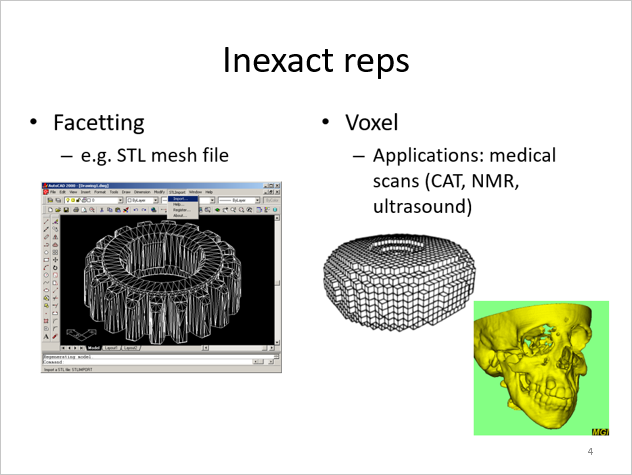

Then there is another technique, which is a special case of boundary representation: facet, or mesh, modelling. Here you still have an explicit set of boundary elements, but the elements themselves are much simpler: you narrow your boundary representation down to a set of primitives, such as triangles or quadrangles. This simplifies the underlying data structures a lot. You no longer need parametric equations for curves and surfaces, as all you need is an unstructured grid, i.e., a list of primitives composed of nodes coming in a certain order. You do not care much about the differential, or local, properties of your surface elements. This is a very popular approach, especially in computer graphics, including gaming and sculpting, because it allows for very efficient and robust modelling algorithms. The drawback of this approach is pretty obvious: you model for shape, not for accuracy. Accuracy is sacrificed for efficiency, robustness and simplicity.
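A faceted model really is just a node list plus an index list. The sketch below (hypothetical names `TriMesh`, `volume` and `area`) stores a closed triangle mesh and computes its enclosed volume with the divergence theorem, i.e. as a sum of signed tetrahedra a·(b×c)/6 spanned by the origin and each triangle. This assumes a closed mesh whose triangles are consistently ordered counter-clockwise when seen from outside. No surface equations are involved, only node coordinates and their ordering.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

struct Node { double x, y, z; };

// Unstructured triangle mesh: nodes plus triangles given as ordered node indices.
// Counter-clockwise ordering (seen from outside) encodes the outward side.
struct TriMesh {
  std::vector<Node> nodes;
  std::vector<std::array<int, 3>> tris;
};

// Contract check: every triangle must reference existing nodes.
void checkIndices(const TriMesh& m) {
  for (const auto& t : m.tris)
    for (int idx : t) assert(idx >= 0 && idx < static_cast<int>(m.nodes.size()));
}

// Enclosed volume via the divergence theorem (closed, consistently oriented mesh).
double volume(const TriMesh& m) {
  checkIndices(m);
  double v6 = 0.0;  // six times the volume
  for (const auto& t : m.tris) {
    const Node& a = m.nodes[t[0]]; const Node& b = m.nodes[t[1]]; const Node& c = m.nodes[t[2]];
    v6 += a.x * (b.y * c.z - b.z * c.y)
        - a.y * (b.x * c.z - b.z * c.x)
        + a.z * (b.x * c.y - b.y * c.x);
  }
  return v6 / 6.0;
}

// Total surface area: half the cross-product magnitude of each triangle.
double area(const TriMesh& m) {
  checkIndices(m);
  double s = 0.0;
  for (const auto& t : m.tris) {
    const Node& a = m.nodes[t[0]]; const Node& b = m.nodes[t[1]]; const Node& c = m.nodes[t[2]];
    const double ux = b.x - a.x, uy = b.y - a.y, uz = b.z - a.z;
    const double vx = c.x - a.x, vy = c.y - a.y, vz = c.z - a.z;
    const double nx = uy * vz - uz * vy, ny = uz * vx - ux * vz, nz = ux * vy - uy * vx;
    s += 0.5 * std::sqrt(nx * nx + ny * ny + nz * nz);
  }
  return s;
}
```

An octahedron built from the six unit points on the coordinate axes is the crudest faceting of the unit sphere: its volume is 4/3, against 4π/3 (about 4.19) for the exact sphere, which illustrates the accuracy given up when you model for shape.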

But if you are interested in feature-based modelling scenarios or in traditional CAD applications such as CNC machining, you need to deal with features, because you need to know the exact surface types to generate milling paths. Or you might want to run some sort of numerical simulation, where you have to switch to a finite element model of your shape, which cannot be easily derived from a mesh unless you have a link to the exact CAD model with all its perfect form features. With a pure mesh model, you are limited in precision, and you lack the luxury of an ideal shape representation, a sort of master shape from which all the derived representations can be generated. That's why the faceted representation is secondary in the Computer-Aided Design field. We can always generate facets at a certain level of detail from the precise boundary representation. But we cannot easily solve the reverse problem: it remains a real challenge with no complete and automatic solution out there.
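Generating facets "at a certain level of detail" usually means honouring a chordal deflection tolerance. As a hedged illustration on the simplest case, a circle of radius r: a chord spanning the angle t deviates from the arc by at most the sagitta r (1 - cos(t/2)), so the number of segments follows directly from the tolerance. The function names below are hypothetical. OpenCascade's mesher, `BRepMesh_IncrementalMesh`, is likewise driven by linear and angular deflection parameters, but it is not used here.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Point2 { double x, y; };

// Smallest number of equal chords approximating a circle of radius r such that
// the sagitta (maximum chord-to-arc distance) does not exceed the deflection.
int segmentsForDeflection(double r, double deflection) {
  assert(r > 0.0 && deflection > 0.0);
  if (deflection >= r) return 3;  // very coarse tolerance: a triangle (sagitta r/2) already fits
  const double pi = std::acos(-1.0);
  const double maxAngle = 2.0 * std::acos(1.0 - deflection / r);  // invert r (1 - cos(t/2)) = d
  const int n = static_cast<int>(std::ceil(2.0 * pi / maxAngle));
  return n < 3 ? 3 : n;
}

// Polygon vertices of the tessellated circle, centred at the origin.
std::vector<Point2> tessellateCircle(double r, double deflection) {
  const int n = segmentsForDeflection(r, deflection);
  const double pi = std::acos(-1.0);
  std::vector<Point2> pts;
  pts.reserve(n);
  for (int i = 0; i < n; ++i) {
    const double t = 2.0 * pi * i / n;
    pts.push_back({r * std::cos(t), r * std::sin(t)});
  }
  return pts;
}
```

Going forward from the exact circle is a one-liner; going back from the polygon to the exact circle would already require fitting and some guesswork, which is the reverse problem mentioned above.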

Then there is another shape representation scheme: voxelization. It is a decomposition scheme, where you strive to describe not the object itself, but the piece of ambient space it is embedded in. Here you can use different approaches, ranging from plain and simple uniform decomposition, where you have a sort of 3D raster image, to more advanced decomposition schemes such as Adaptively Sampled Distance Fields (ADF). Such adaptive schemes are more compact and save a great deal of memory and computational resources.
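A uniform voxel grid is easy to sketch. The hypothetical `VoxelGrid` class below stores one byte per cell over an axis-aligned box, rasterizes any membership predicate by sampling cell centres, and answers point queries by plain index arithmetic. Its memory footprint grows as n³, so doubling the resolution costs eight times more storage, which is precisely what adaptive schemes such as adaptive distance fields try to avoid.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

struct Point3 { double x, y, z; };

// Uniform voxel grid: a 3D raster of n^3 cubic cells of size h (1 = material, 0 = void).
class VoxelGrid {
public:
  VoxelGrid(const Point3& origin, double cellSize, int n)
    : origin_(origin), h_(cellSize), n_(n), cells_(static_cast<std::size_t>(n) * n * n, 0) {
    assert(cellSize > 0.0 && n > 0);
  }

  // Fills the grid by sampling a membership predicate at every cell centre.
  void voxelize(const std::function<bool(const Point3&)>& inside) {
    for (int i = 0; i < n_; ++i)
      for (int j = 0; j < n_; ++j)
        for (int k = 0; k < n_; ++k)
          cells_[index(i, j, k)] = inside(centre(i, j, k)) ? 1 : 0;
  }

  // Point query: O(1) lookup of the cell containing p; points outside the box are void.
  bool contains(const Point3& p) const {
    const int i = static_cast<int>(std::floor((p.x - origin_.x) / h_));
    const int j = static_cast<int>(std::floor((p.y - origin_.y) / h_));
    const int k = static_cast<int>(std::floor((p.z - origin_.z) / h_));
    if (i < 0 || j < 0 || k < 0 || i >= n_ || j >= n_ || k >= n_) return false;
    return cells_[index(i, j, k)] != 0;
  }

  // Approximate volume: number of filled cells times the cell volume.
  double volume() const {
    std::size_t filled = 0;
    for (std::uint8_t c : cells_) filled += c;
    return static_cast<double>(filled) * h_ * h_ * h_;
  }

  std::size_t memoryBytes() const { return cells_.size() * sizeof(std::uint8_t); }

private:
  std::size_t index(int i, int j, int k) const {
    return (static_cast<std::size_t>(i) * n_ + j) * n_ + k;
  }
  Point3 centre(int i, int j, int k) const {
    return {origin_.x + (i + 0.5) * h_, origin_.y + (j + 0.5) * h_, origin_.z + (k + 0.5) * h_};
  }

  Point3 origin_;
  double h_;
  int n_;
  std::vector<std::uint8_t> cells_;
};
```

For example, voxelizing a unit sphere over the box [-1, 1]³ with 0.05 cells takes a 40³ grid of 64,000 bytes, and the filled-cell count times the cell volume approximates 4π/3.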

There are other, more exotic approaches to shape representation that are not outlined here on my slides. Just for reference, you can look up the so-called functional representation, or F-rep. We are not going to say much about it, because, after all, it is a niche technology that has not gained much popularity in industrial applications. At least, let's not discuss it in this short introductory talk.

ISO 10303-42 makes the choice for us.

So, let's move on. How do we choose a shape representation scheme if there are so many of them? Fortunately, this choice has largely been made for us by the ISO 10303-42 standard, which promotes boundary representation as the ultimate way of describing a digital product. So if you want to stay compliant with this widely adopted standard, you go for a B-rep modeller.

That's not surprising, because boundary representation closely resembles old-school drawings: those blueprints made of two-dimensional lines, circles, and splines that are so popular in mechanical engineering. The 3D boundary representation scheme takes them to the next level by adding another dimension and eliminating ambiguities from the geometry it describes. Since the boundary elements are materialized entities, you can attach various attributes, such as dimensions and tolerances or even design review notes, right to the graphical entities you see on the screen.
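Attaching attributes to materialized boundary elements can be sketched as follows. This is a deliberately tiny, hypothetical data model (`Face`, `SurfaceKind`, `Model`), not OpenCascade's, where topology lives in classes such as `TopoDS_Face` and attributes are usually managed through the OCAF/XDE application framework. Each face knows its exact surface kind and carries a key-value attribute map, so a tolerance can be attached to one specific cylindrical face, and all cylindrical faces can be found directly, which is also what a CAM system needs for generating milling paths.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Exact surface kinds a face can carry (simplified).
enum class SurfaceKind { Plane, Cylinder, Sphere, BSpline };

// Hypothetical B-rep face: a topological entity referencing its exact geometry.
struct Face {
  int id;
  SurfaceKind kind;
  std::vector<double> params;                     // meaning depends on kind, e.g. cylinder radius
  std::map<std::string, std::string> attributes;  // tolerances, notes, other annotations
};

struct Model {
  std::vector<Face> faces;

  // Attaches a named attribute to the face with the given id; false if there is no such face.
  bool attach(int faceId, const std::string& key, const std::string& value) {
    assert(!key.empty());  // trivial contract check
    for (Face& f : faces)
      if (f.id == faceId) { f.attributes[key] = value; return true; }
    return false;
  }

  // Exact surface types make feature queries such as "all cylindrical faces" trivial.
  std::vector<int> facesOfKind(SurfaceKind kind) const {
    std::vector<int> ids;
    for (const Face& f : faces)
      if (f.kind == kind) ids.push_back(f.id);
    return ids;
  }
};
```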

B-rep rocks.

To sum up, boundary modellers dominate the CAD and CAM markets. The main reason is that B-rep strikes just the right balance between computational efficiency and clarity for a human being. Boundary representation is a natural successor to drawings, which humans have used for centuries, if not thousands of years. In this regard, B-rep is a trade-off that is convenient enough for both computers and human perception. Again, you can attach your attributes, tolerances, whatever, to something tangible instead of to imprecise voxels or pieces of mesh for which you never know what features they really describe.

Okay, B-rep rocks, but...

So boundary representation is good in all respects, but it is almost too good to be true. The problem is that it is insanely hard to develop an efficient, robust and accurate boundary modelling kernel. It is extremely hard to build a decent geometric modelling system. So we are not going to implement one. Instead, we are going to get started with the OpenCascade kernel, which provides all the necessary features right out of the box. In the next lesson, we will talk about OpenCascade in more detail.
